Kerala Plus One Computer Science Notes Chapter 1 The Discipline of Computing

Summary

Computing milestones and machine evolution:

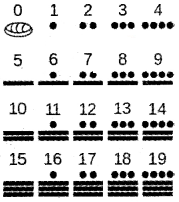

In earlier days, people used pebbles and stones for counting. They drew lines to record information, e.g. one line for one, two lines for two, three lines for three, and so on. In this number system the value does not change if the lines are interchanged. This type of number system is called a non-positional number system.

Counting and the evolution of the positional number system:

In a positional number system, each digit has a weight that depends on its position. In early times, sticks were used to count items such as animals or objects. Around 3000 BC the Egyptians used a number system with radix 10 (the radix or base is the number of symbols or digits used in the number system), and they wrote from right to left.
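To make the idea of weight concrete, here is a minimal sketch (the function name `positional_value` is a hypothetical choice) that evaluates a list of digits in any base. The rightmost digit has weight base^0, the next one base^1, and so on. Swapping two digits changes the value, which is what distinguishes a positional system from the tally-line system described above.

```python
def positional_value(digits: list[int], base: int) -> int:
    """Evaluate digits (most significant first) in the given base.

    The digit at position p, counted from 0 at the right, has weight base ** p.
    """
    value = 0
    for position, digit in enumerate(reversed(digits)):
        if not 0 <= digit < base:
            raise ValueError(f"digit {digit} is not valid in base {base}")
        value += digit * base ** position
    return value


# Same three digits, different order, different values.
print(positional_value([1, 2, 3], 10))  # 123
print(positional_value([3, 2, 1], 10))  # 321
```

Because the base is a parameter, the same function also covers base 60: `positional_value([1, 30], 60)` gives 1 × 60 + 30 = 90.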


Later, the Sumerians/Babylonians used a number system with the largest base, 60, written from left to right. They used a space for zero instead of a symbol such as 0. Around 2500 BC, the Chinese used a simple and efficient number system with base 10, very close to the number system used today.

Around 500 BC, the Greeks used a number system known as Ionian; it was a decimal number system with no symbol for zero. Roman numerals consist of seven letters: I, V, X, L, C, D and M. The Mayans used a number system with base 20 because the total number of fingers and toes is 10 + 10 = 20.

It is called a vigesimal positional number system. The numerals are made up of three symbols: zero (a shell shape, with the plastron uppermost), one (a dot) and five (a bar, i.e. a horizontal line). To represent 1 they used one dot, two dots for 2, and so on.
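Converting an ordinary number into vigesimal digits is repeated division by 20, keeping the remainders; each digit (0 to 19) is then drawn with bars worth five and dots worth one. The sketch below assumes a plain base-20 place value system and uses ASCII stand-ins for the three symbols (`@` for the shell, `.` for a dot, `-` for a bar); these characters and the function names are illustrative assumptions, not real Maya glyphs.

```python
def to_base(n: int, base: int) -> list[int]:
    """Digits of n in the given base, most significant first.

    Repeated division by the base: each remainder is the next digit
    from the right, so the collected digits are reversed at the end.
    """
    if n < 0:
        raise ValueError("this sketch handles non-negative numbers only")
    if n == 0:
        return [0]
    digits = []
    while n > 0:
        n, remainder = divmod(n, base)
        digits.append(remainder)
    return digits[::-1]


def maya_digit(d: int) -> str:
    """Draw one vigesimal digit (0-19) with ASCII stand-ins:
    '@' = shell (zero), '.' = dot (one), '-' = bar (five)."""
    if not 0 <= d < 20:
        raise ValueError("a vigesimal digit must be between 0 and 19")
    if d == 0:
        return "@"
    bars, dots = divmod(d, 5)
    return "." * dots + "-" * bars


# 47 = 2 x 20 + 7, so the digits are 2 and 7 (one bar and two dots).
print([maya_digit(d) for d in to_base(47, 20)])  # ['..', '..-']
```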

The Hindu-Arabic number system, which originated in India about 1500 years ago, has a symbol (0) for zero. The table below compares Roman numerals with Hindu-Arabic numbers.

| Roman numeral | Hindu-Arabic (decimal) value |
|---|---|
| I | 1 |
| V | 5 |
| X | 10 |
| L | 50 |
| C | 100 |
| D | 500 |
| M | 1000 |
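Using the letter values from the table, a Roman numeral can be converted to a decimal number by scanning it from left to right. The table does not show it, but this sketch also assumes the standard subtractive rule, in which a smaller letter written before a larger one is subtracted (IV = 4, XC = 90). It does not check whether a numeral is well formed, so inputs such as "IIII" are simply added up.

```python
ROMAN_VALUES = {"I": 1, "V": 5, "X": 10, "L": 50, "C": 100, "D": 500, "M": 1000}


def roman_to_decimal(numeral: str) -> int:
    """Convert a Roman numeral to an integer using the letter values above."""
    numeral = numeral.upper()
    total = 0
    for i, letter in enumerate(numeral):
        value = ROMAN_VALUES[letter]
        # Subtractive rule: a letter worth less than the next one is subtracted.
        if i + 1 < len(numeral) and value < ROMAN_VALUES[numeral[i + 1]]:
            total -= value
        else:
            total += value
    return total


print(roman_to_decimal("XLII"))     # 42
print(roman_to_decimal("MCMXCIV"))  # 1994
```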

Evolution of the computing machine:

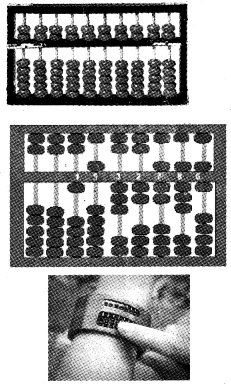

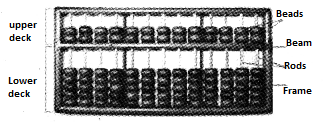

(a) Abacus:

The abacus was introduced by the Mesopotamians around 3000 BC; the word means calculating board or frame. It is considered the first computer for basic arithmetic calculations and consists of beads on movable rods divided into two parts. The Chinese improved the abacus with seven beads on each wire. Different types of abacus are shown in the figures.

(b) Napier’s bones:

The mathematician John Napier introduced Napier's bones, a set of numbered rods used to simplify multiplication, in AD 1617.

(c) Pascaline:

The French mathematician Blaise Pascal developed this machine in 1642; it could perform arithmetic operations.

(d) Leibniz’s calculator:

In 1673, the German mathematician and philosopher Gottfried Wilhelm von Leibniz introduced this calculating machine.

(e) Jacquard’s loom:

In 1801, Joseph Marie Jacquard invented a mechanical loom that simplified the process of manufacturing textiles with complex patterns. A program stored on punched cards was used to control the machine, with the help of human labour. This punched-card concept was later adopted by Charles Babbage to control his Analytical Engine, and later still by Hollerith.

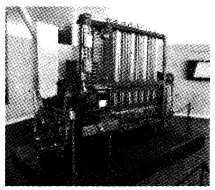

(f) Difference engine:

In 1822, Charles Babbage designed the Difference Engine, which eliminated human intervention from calculations. It could perform arithmetic operations and print the results automatically.
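The notes do not say how the Difference Engine calculated; it is usually described as tabulating polynomials by the method of finite differences, which needs nothing but repeated addition. For a polynomial of degree k the k-th differences are constant, so after computing the first k + 1 values once, every further value comes from additions alone. A minimal sketch of that method follows; the function names and the example polynomial f(x) = x² + x + 41 are illustrative assumptions.

```python
def initial_differences(values: list[int]) -> list[int]:
    """From the first (degree + 1) table values, build [f(0), Δf(0), Δ²f(0), ...]."""
    diffs = []
    row = list(values)
    while row:
        diffs.append(row[0])
        row = [b - a for a, b in zip(row, row[1:])]  # next order of differences
    return diffs


def tabulate(diffs: list[int], count: int) -> list[int]:
    """Produce `count` table entries using only additions.

    Each column adds the column to its right. Updating from the left means
    every column uses its neighbour's value from the previous step.
    """
    registers = list(diffs)
    table = []
    for _ in range(count):
        table.append(registers[0])
        for i in range(len(registers) - 1):
            registers[i] += registers[i + 1]
    return table


# f(x) = x**2 + x + 41 has f(0), f(1), f(2) = 41, 43, 47.
print(tabulate(initial_differences([41, 43, 47]), 6))  # [41, 43, 47, 53, 61, 71]
```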

(g) Analytical engine:

In 1833, Charles Babbage introduced the Analytical Engine, which is considered the predecessor of today's computer. Charles Babbage is therefore considered the “Father of the Computer”. This engine was to be controlled by programs stored on punched cards. These programs were written by Babbage's assistant, Augusta Ada King, who is considered the first programmer in the world.

(h) Hollerith’s machine:

In 1887, the American Herman Hollerith built the first electromechanical punched-card machine, which used punched cards with instructions for input and output. Each card contained holes in a particular pattern, and each pattern had a special meaning. The US Census Bureau had a large amount of data to tabulate, which would have taken nearly 10 years.

With this machine, the work was completed in one year. In 1896, Hollerith started the Tabulating Machine Company, which later became International Business Machines (IBM).

(i) Mark-1:

In 1944, Howard Aiken, in collaboration with engineers at IBM, built the Mark-1, an automatic electromechanical computer that handled numbers of 23 decimal places and could perform addition, subtraction, multiplication and division.

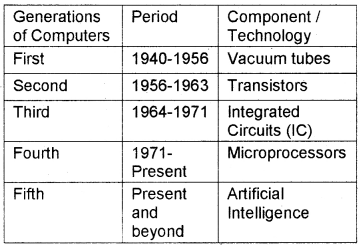

Generations of computers:

Computers are classified into five generations, from the 1940s to the present day.

First generation computers (1940 – 1956):

First-generation computers used vacuum tubes. Input was based on punched cards and paper tape, and output was displayed on printouts. The Electronic Numerical Integrator and Computer (ENIAC), a first-generation machine, was the first general-purpose programmable electronic computer; it was built by J. Presper Eckert and John W. Mauchly.

It occupied a room of about 30 × 50 feet, weighed about 30 tons, and contained about 18,000 vacuum tubes, 70,000 resistors and 10,000 capacitors. It required 1,50,000 watts (150 kW) of electricity and needed air conditioning. Eckert and Mauchly later developed the first commercially successful computer, the Universal Automatic Computer (UNIVAC), in 1951.

Von Neumann architecture:

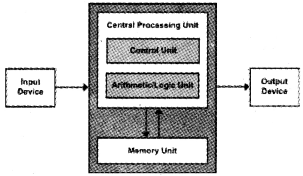

The mathematician John von Neumann designed a computer structure that is still in use today. The von Neumann architecture consists of a central processing unit (CPU), a memory unit, and input and output units. The CPU consists of an arithmetic logic unit (ALU) and a control unit (CU).

Instructions are stored in memory along with data; this is called the “Stored Program Concept”. Colossus was a secret code-breaking computer developed by the British engineer Tommy Flowers in 1943 to decode German messages.
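The stored program concept can be illustrated with a tiny simulated machine in which instructions and data sit side by side in one memory. The control unit repeatedly fetches the instruction at the program counter, decodes it and executes it, and the ALU does the arithmetic. The instruction set (LOAD, ADD, STORE, HALT), the single accumulator register and the function name are hypothetical simplifications, not a description of any real computer.

```python
def run(memory: list) -> list:
    """Fetch-decode-execute loop for a hypothetical accumulator machine.

    Instructions are (opcode, address) tuples and data are plain ints,
    but both live in the same memory list: the stored program concept.
    """
    acc = 0  # accumulator register in the CPU
    pc = 0   # program counter: memory address of the next instruction
    while True:
        opcode, address = memory[pc]  # control unit: fetch and decode
        pc += 1
        if opcode == "LOAD":
            acc = memory[address]
        elif opcode == "ADD":
            acc = acc + memory[address]  # ALU: arithmetic
        elif opcode == "STORE":
            memory[address] = acc
        elif opcode == "HALT":
            return memory
        else:
            raise ValueError(f"unknown opcode {opcode!r}")


program_and_data = [
    ("LOAD", 4),   # address 0
    ("ADD", 5),    # address 1
    ("STORE", 6),  # address 2
    ("HALT", 0),   # address 3
    7,             # address 4: data
    35,            # address 5: data
    0,             # address 6: the result is stored here
]
print(run(program_and_data)[6])  # 42
```

Because the program is just data in memory, a different job only needs different memory contents, not rewiring of the machine.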

Second generation computers (1956 -1963):

Second-generation computers used transistors instead of vacuum tubes. As a result they were smaller and less expensive, consumed less electricity, emitted less heat, and were more powerful and faster.

The transistor was developed at Bell Laboratories by a team consisting of John Bardeen, Walter Brattain and William Shockley. From this generation onwards, the concept of programming languages was developed, and computers used magnetic core memory as primary memory and magnetic disk as secondary memory.

These computers used high-level languages (languages that use English-like statements) such as FORTRAN (Formula Translation) and COBOL (Common Business Oriented Language). Popular computers of this generation were the IBM 1401 and IBM 1620.

Third generation computers (1964 – 1971):

Third-generation computers used integrated circuits (ICs). ICs, or silicon chips, were developed by Jack Kilby, an engineer at Texas Instruments. ICs reduced the size of computers further and increased their speed and efficiency. The high-level language BASIC (Beginner's All-purpose Symbolic Instruction Code) was developed during this period.

Popular computers of this generation were the IBM 360 and IBM 370. Because they were simpler and cheaper, more people used them. The number of transistors on an IC doubles approximately every two years. This is called Moore's Law, named after Gordon E. Moore; it is an observation, not a physical or natural law.
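Doubling every two years means the count after t years is N(t) = N₀ × 2^(t/2), where N₀ is the starting count. A short sketch of that formula, assuming a hypothetical starting chip of 1,000 transistors (not a historical figure):

```python
def projected_transistors(initial_count: float, years: float,
                          doubling_period: float = 2.0) -> float:
    """N(t) = N0 * 2 ** (t / T): one doubling every T years."""
    return initial_count * 2 ** (years / doubling_period)


# Hypothetical chip with 1,000 transistors: 10 years means 5 doublings.
print(projected_transistors(1000, 10))  # 32000.0
```

Since Moore's Law is an observation rather than a physical law, such a projection is only a trend line, not a guarantee.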

Fourth generation computers (1971 onwards):

Fourth-generation computers use microprocessors, so they are called microcomputers. A microprocessor is a single chip built with Large Scale Integration (LSI), which packs components such as transistors, capacitors and resistors onto one chip, so that an entire CPU can be placed on a single chip. Later, LSI was replaced by Very Large Scale Integration (VLSI). Popular computers of this generation are the IBM PC and the Apple II.

Fifth generation computers (future):

Fifth-generation computers are based on Artificial Intelligence (AI). AI is the ability of a machine to act with human-like intelligence, for example in speech recognition, face recognition, robotic vision and movement. The most common AI programming languages are LISP and Prolog.

Evolution of computing:

Computing machines are used for processing or calculating data and for storing and displaying information. In the 1940s, computers were used only for single tasks, like a calculator, but today's computers can perform multiple tasks at a time.

The “Stored Program Concept”, a revolutionary innovation by John von Neumann, made it possible to store programs and data in memory. A program is a collection of instructions for carrying out a specific job or task.

Augusta Ada Lovelace: She was the Countess of Lovelace, a mathematician and writer. She is considered the first woman computer programmer.

Programming languages:

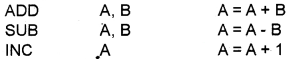

Instructions to a computer are written in languages of different levels: low-level language (machine language), assembly language (middle-level language) and high-level language (HLL).

In machine language, programs are written using 0s and 1s. It is very difficult to use, but it is the only language the computer understands directly. In assembly language, programs are written using mnemonics (short codes such as ADD or LOAD).

The Electronic Delay Storage Automatic Calculator (EDSAC), built in 1949, was the first computer to use assembly language. In an HLL, programs are written using English-like statements. The A-0 programming language, developed by Dr. Grace Hopper in 1952 for the UNIVAC I, is considered the first HLL.

A team led by John Backus developed FORTRAN at IBM for the IBM 704 computer, and Lisp was designed by John McCarthy at the Massachusetts Institute of Technology, where Tim Hart and Mike Levin wrote its first compiler. Other HLLs include C, C++, COBOL, Pascal, VB and Java. HLLs are easy to learn and can be easily understood by humans.

Programmers usually prefer HLLs because of their simplicity, but a computer understands only machine language, so programs must be translated. The programs that perform this translation are called language processors.
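Compilers and interpreters for HLLs are large programs, but the simplest language processor to sketch is an assembler, which translates assembly mnemonics into machine-language bit patterns. The 8-bit instruction format below (a 4-bit opcode followed by a 4-bit address) and the opcode numbers are hypothetical, chosen only to show the translation step.

```python
# Hypothetical opcode table: mnemonic -> 4-bit operation code.
OPCODES = {"LOAD": 0b0001, "ADD": 0b0010, "STORE": 0b0011, "HALT": 0b1111}


def assemble(source: str) -> list[str]:
    """Translate one instruction per line into 8-bit machine words."""
    machine_code = []
    for line in source.strip().splitlines():
        parts = line.split()
        if not parts:
            continue  # skip blank lines
        mnemonic = parts[0].upper()
        address = int(parts[1]) if len(parts) > 1 else 0
        if mnemonic not in OPCODES:
            raise ValueError(f"unknown mnemonic {mnemonic!r}")
        if not 0 <= address < 16:
            raise ValueError("address must fit in 4 bits")
        word = (OPCODES[mnemonic] << 4) | address  # opcode in high 4 bits
        machine_code.append(format(word, "08b"))   # as a string of 0s and 1s
    return machine_code


print(assemble("LOAD 4\nADD 5\nSTORE 6\nHALT"))
# ['00010100', '00100101', '00110110', '11110000']
```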

Algorithm and computer programs:

A step-by-step procedure to solve a problem is known as an algorithm. The word comes from the name of the famous Persian mathematician Abu Jafer Mohammed Ibn Musaa Al-Khowarizmi; the last part of his name, Al-Khowarizmi, was corrupted into “algorithm”.
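As an illustration of a step-by-step procedure, here is Euclid's algorithm for the greatest common divisor (GCD) of two whole numbers, chosen only as a classic example; the notes do not name a specific algorithm. Each step is simple and the procedure is guaranteed to stop, because the second number gets smaller every time.

```python
def gcd(a: int, b: int) -> int:
    """Greatest common divisor by Euclid's algorithm."""
    a, b = abs(a), abs(b)
    # Step 1: if b is 0, the answer is a.
    # Step 2: otherwise replace (a, b) with (b, a mod b) and go back to Step 1.
    while b != 0:
        a, b = b, a % b
    return a


print(gcd(48, 18))  # 6
```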

Theory of computing:

The theory of computing deals with how efficiently problems can be solved using algorithms and computation. The study of the effectiveness of computation is based on a mathematical abstraction of computers called a model of computation. The most commonly used model is the Turing machine, named after the famous computer scientist Alan Turing.

1. Contribution of Alan Turing:

He was a British mathematician, logician, cryptographer and computer scientist. He formalised the concepts of algorithm and computation with the help of his invention, the Turing machine.

His question ‘Can machines think?’ laid the foundation for studies of computing machinery and intelligence. Because of these contributions he is considered the Father of Modern Computer Science as well as of Artificial Intelligence.

2. Turing Machine:

In 1936 Alan Turing introduced an abstract machine called the Turing machine. A Turing machine is a hypothetical device that manipulates symbols on a strip of tape according to a table of rules. The tape acts like the memory in a computer. It is divided into cells; each cell starts out blank and may contain 0 or 1.

Because each cell holds one of three symbols (blank, 0 or 1), it is called a 3-symbol Turing machine. The machine reads and writes one cell at a time using a tape head, and it can move the tape left or right by one cell so that it can read and edit the symbols in neighbouring cells. The action of a Turing machine is determined by:

- the current state of the machine

- the symbol in the cell currently being scanned by the head and

- a table of transition rules, which acts as the program.
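The three ingredients listed above (current state, scanned symbol, transition table) are all a simulator needs. The sketch below runs a 3-symbol machine whose rule table, a hypothetical example program, adds 1 to a binary number. `_` stands for a blank cell, and the head moves over a fixed tape rather than the tape moving under the head, which is equivalent. The state names and function name are illustrative assumptions.

```python
BLANK = "_"  # stands for an empty cell

# Transition table (the "program"):
# (current state, symbol under head) -> (symbol to write, head move, next state)
INCREMENT = {
    ("right", "0"): ("0", +1, "right"),      # skip over digits to the right end
    ("right", "1"): ("1", +1, "right"),
    ("right", BLANK): (BLANK, -1, "carry"),  # past the last digit: turn back
    ("carry", "1"): ("0", -1, "carry"),      # 1 + carry = 0, carry moves left
    ("carry", "0"): ("1", -1, "halt"),       # 0 + carry = 1, done
    ("carry", BLANK): ("1", -1, "halt"),     # ran off the left end: new leading 1
}


def run_turing_machine(rules: dict, tape_input: str, start: str = "right",
                       max_steps: int = 10_000) -> str:
    tape = dict(enumerate(tape_input))  # cell index -> symbol; unlisted cells are blank
    head, state, steps = 0, start, 0
    while state != "halt":
        if steps >= max_steps:
            raise RuntimeError("step limit reached without halting")
        symbol = tape.get(head, BLANK)               # read the scanned cell
        write, move, state = rules[(state, symbol)]  # look up the rule
        tape[head] = write                           # write one cell
        head += move                                 # move one cell left or right
        steps += 1
    cells = (tape.get(i, BLANK) for i in range(min(tape), max(tape) + 1))
    return "".join(cells).strip(BLANK)


print(run_turing_machine(INCREMENT, "1011"))  # 1100
print(run_turing_machine(INCREMENT, "111"))   # 1000
```

Changing only the `INCREMENT` table gives the same simulator a different program, mirroring the idea that the table of transition rules acts as the program.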

3. Turing Test:

The Turing test is a test of a machine's ability to exhibit intelligent behaviour equivalent to, or indistinguishable from, that of a human. In the test, a human judge engages in natural-language conversations with a human and with a machine designed to produce responses indistinguishable from those of a human being.

All participants are separated from one another. If the judge cannot reliably tell the machine from the human, the machine is said to have passed the test. The test does not check the ability to give correct answers to questions; it checks how closely the answers resemble typical human answers. Turing predicted that by the year 2000 computers would be able to pass the test.